“Deep learning” is more than the latest buzz phrase. It’s a strategy that is set to vastly expand the range of intelligent tasks we can assign to computers. What is it? In short, it’s the use of neural networks with many trainable layers to recognize complex patterns in large sets of data, and to keep improving their own accuracy as they do so.

As a marketer, do you really need to know much about it? After all, you get the gist of machine learning. You know what an Amazon-type algorithm is. It’s enough, surely, to have the vague idea that deep learning is machine learning on steroids. Maybe so, and it’s certainly the case that understanding deep learning at anything more than a shallow level means taking a precipitous leap into difficult cognitive science concepts and—of course—advanced math.

But if you want to have a slightly better picture of what’s meant when yet another vendor tells you about a fabulous new deep learning-based solution, read on.

Basic Machine Learning

As we’ve said before, “machine learning applications run largely on ‘supervised learning’ algorithms that use historical data to predict future events.” The familiar example is Amazon.com’s recommendation algorithm, which predicts future purchases from transaction history and makes better predictions as that history (the data set) grows. The supervision comes from labeled examples, meaning past data where the right answer is already known, along with human reviewers who look at sample outputs and make corrections (if she’s interested in “the Wars of the Roses,” offer history books, not gardening books) that improve the learning process.

As a rough simplification, it’s fair to think of traditional machine learning as a system of fairly rigid “if…then…” rules, combined with an ability to improve those rules automatically based on feedback.

Neural Networks

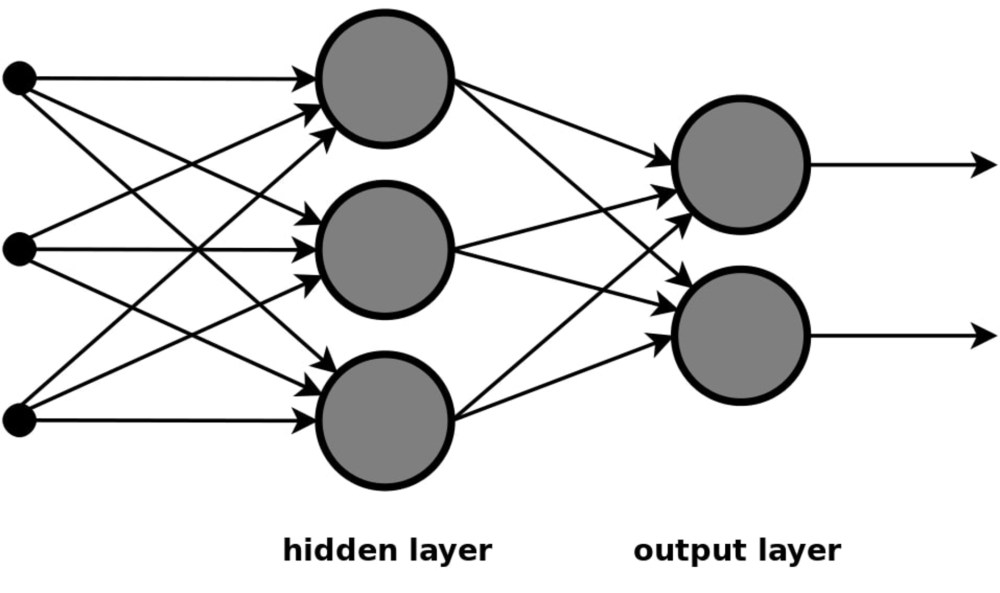

Don’t worry, we’re not dissecting frog brains here. The term “neural network” is borrowed from biology, but in the machine learning context it refers to artificial neurons (bits of math), arranged in layers, that are activated by an input, pass signals on from one layer to the next, then produce an output. This is called “forward propagation.” As in traditional machine learning, the network then finds out how accurate the output was and adjusts accordingly: the error is passed backward through the layers so that each neuron’s connections can be tweaked to reduce it. This is called “backpropagation,” and it is how the neurons are trained.
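To make forward propagation and backpropagation a little more concrete, here is a minimal Python sketch of a single artificial neuron learning one yes/no pattern. It assumes a made-up toy data set with two hypothetical features per shopper (bought a history book, bought a gardening book) and a label saying whether she clicked a history recommendation; the function names are illustrative, not taken from any library. With only one neuron, the backward step collapses to a single gradient update; in a multi-layer network the same error signal is passed back through every layer using the chain rule.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, inputs):
    """Forward propagation: weighted sum of the inputs, then the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train_neuron(examples, epochs=2000, learning_rate=0.5):
    """Train one neuron on (inputs, target) pairs, where target is 0 or 1."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            output = predict(weights, bias, inputs)  # forward pass
            # Backward pass: with a sigmoid output and cross-entropy loss,
            # the gradient of the loss with respect to z is (output - target).
            error = output - target
            # Nudge each weight in proportion to its input's share of the error.
            weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias

# Hypothetical toy data: [bought_history_book, bought_gardening_book] -> clicked history offer?
examples = [([1, 0], 1), ([0, 1], 0), ([1, 1], 1), ([0, 0], 0)]
weights, bias = train_neuron(examples)
print(round(predict(weights, bias, [1, 0]), 3))  # history buyer: close to 1
print(round(predict(weights, bias, [0, 1]), 3))  # gardening-only buyer: close to 0
```

Each pass through the loop is one round of “guess, check, adjust”: the neuron starts out knowing nothing (all weights zero) and ends up weighting the history-book signal heavily.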

How many nodes are in a neural network layer? That depends on the complexity of the inputs, the amount of training data available, and…but wait, we’re not designing a network here. It’s the next step that’s interesting: multiplying what are known as the “hidden layers” between the input layer and the output layer. Think of these layers as literally stacked up, one on top of another. That depth is simply why this kind of machine learning is called “deep.”
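Here is a rough Python sketch of what “stacked” means in practice: forward propagation is the same layer operation applied again and again, with each layer’s output becoming the next layer’s input. The weights below are made-up numbers chosen so the arithmetic is easy to follow, and for simplicity every layer uses the same ReLU activation (real networks often use a different activation at the output layer).

```python
def dense_layer(inputs, weights, biases):
    """One fully connected layer: each neuron takes a weighted sum of every
    input, adds its bias, and applies ReLU (negative totals become 0)."""
    return [
        max(0.0, sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Forward propagation through a stack of (weights, biases) layers."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Made-up weights: a hidden layer of 3 neurons feeding an output layer of 1.
layers = [
    ([[1, 0], [0, 1], [1, 1]], [0, 0, 0]),  # hidden layer
    ([[1, 1, 1]], [-1]),                    # output layer
]
print(forward([1.0, 2.0], layers))  # [5.0]
```

Making this network deeper just means appending more `(weights, biases)` pairs to `layers`; nothing in `forward` has to change.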

Deep Learning and Pattern Recognition

A stack of network layers just turns out to be that much better at recognizing patterns in the input data. This is something computers have traditionally been much worse at than human beings. Ask a computer to calculate pi or predict the path of an asteroid through space and it’s off to the races. Ask it to tell a cat from a dog (let alone distinguish stylized cartoon versions) and suddenly it’s struggling to do something humans do really well.

Deep learning helps with pattern recognition because the layers break complex patterns down into a hierarchy of simpler ones: early layers pick up simple features, and later layers combine them into more complex patterns (with the backpropagation training process going on throughout). Puzzling over this, I turned to Dan Kuster for a helpful analogy. Dr. Kuster is a deep learning researcher at Indico, a cloud-based machine learning platform and data science solutions vendor, and I saw him discuss deep learning at the 2016 Sentiment Analysis Symposium last week.

Kuster suggested thinking of the layers as a series of templates. If you’re using just one template for a task and it turns out to have serious shortcomings, you might need to make revisions that over-complicate it or slow it down, or else scrap it and develop a completely new template. In deep learning, the stacked templates inform and correct each other’s outputs. “If there’s no depth,” he said, “there’s no chance to learn patterns inside of patterns.”
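Kuster’s “patterns inside of patterns” can be illustrated with a classic textbook case, the exclusive-or (XOR) pattern: “one of two signals is present, but not both.” It is a known result that no single threshold neuron can represent XOR, while two stacked layers can. In the minimal Python sketch below, the weights are set by hand rather than learned, to keep it readable; the two hidden neurons act as simple templates (an OR detector and an AND detector), and the output neuron combines them. The grid search only tries a small range of weights, so it illustrates the single-neuron limitation rather than proving it.

```python
import itertools

def step(z):
    """A simple threshold neuron: fires (1) if its weighted input is positive."""
    return 1 if z > 0 else 0

def hidden_layer(a, b):
    h_or = step(a + b - 0.5)   # template 1: at least one signal is present
    h_and = step(a + b - 1.5)  # template 2: both signals are present
    return h_or, h_and

def output_layer(h_or, h_and):
    # A pattern built from the simpler patterns: "at least one, but not both".
    return step(h_or - h_and - 0.5)

def two_layer_xor(a, b):
    return output_layer(*hidden_layer(a, b))

XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

def single_neuron_matches_xor():
    """Search a grid of weights for one neuron step(w1*a + w2*b + bias) that
    reproduces XOR. None exists for any weights; the grid just illustrates it."""
    grid = [x / 2 for x in range(-4, 5)]  # -2.0, -1.5, ..., 2.0
    for w1, w2, bias in itertools.product(grid, repeat=3):
        if all(step(w1 * a + w2 * b + bias) == out for (a, b), out in XOR_TABLE.items()):
            return True
    return False

for (a, b), expected in XOR_TABLE.items():
    print(a, b, two_layer_xor(a, b), expected)
print("single neuron can match XOR:", single_neuron_matches_xor())  # False
```

In a real deep network, the hidden templates would be discovered through backpropagation instead of being wired in by hand, but the division of labor is the same.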

And…So What for Marketers?

Deep learning, like machine learning in general, has broad application. It’s relevant to any field where we need computers not only to find patterns in large quantities of data, but to train themselves to do a better job at it. It’s already being used, for example, to enhance facial and voice recognition solutions.

There’s little question, however, that it’s going to have a major impact on marketing activities that involve mining unstructured data for insights. According to Vishal Daga, CRO at Indico, use cases include analyzing social media data for “the voice of customer, how folks are talking to each other, mining speech for actionable insights.” There’s also targeting and personalization: “A lot of the signal is not available to traditional techniques.” Beyond targeting through demographics, deep learning means looking at “what I say and how I say it” to identify potential prospects. And although Indico is primarily text-focused, deep learning strategies can read sentiment and intent in images too, again by identifying patterns.

What’s So New About It?

Okay, social data, sentiment analysis, personalization: isn’t everyone doing all that already? What’s the big deal? “The objectives aren’t new,” said Kuster, “but deep learning puts it all on fast forward.” Deep learning systems are setting new accuracy records on standard benchmarks. They’re also tackling more complex data mining tasks than searching for specific brand phrases or hashtags. With the ability to grasp complex patterns comes the potential to identify relevant customer sentiment in broader discussions, as well as in images and video.

Deep learning systems do, of course, need to be trained, and that remains perhaps the most significant hurdle to adopting the technique. As Kuster says, they do best when they’re given “tons of examples.” Training a system from scratch can be time-consuming and expensive, but it’s possible to take a neural network with generic pre-training and customize it by sprinkling brand- or topic-relevant examples on top. This approach is widely known as “transfer learning,” and that’s what Indico calls it too.
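As a rough illustration of transfer learning, the Python sketch below keeps a “pre-trained” feature extractor frozen and trains only a small new output layer on a handful of brand-specific examples. Everything here is a hypothetical stand-in: the tiny cue vocabulary plays the role of a network’s generic pre-trained layers, and the task (flagging posts that look like complaints) and the example posts are made up. It is not Indico’s API or method.

```python
import math

# Hypothetical stand-in for generic pre-training: fixed (positive, negative)
# scores for a few cue words. These values are never updated below.
PRETRAINED_CUES = {
    "love": (1.0, 0.0), "great": (1.0, 0.0), "fast": (0.5, 0.0),
    "hate": (0.0, 1.0), "broken": (0.0, 1.0), "slow": (0.0, 0.5),
}

def pretrained_features(text):
    """Frozen feature extractor: total positive and negative cue scores."""
    pos = neg = 0.0
    for word in text.lower().split():
        p, n = PRETRAINED_CUES.get(word, (0.0, 0.0))
        pos += p
        neg += n
    return [pos, neg]

def complaint_probability(head, text):
    """New output layer: logistic regression over the frozen features."""
    weights, bias = head
    x = pretrained_features(text)
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train_head(examples, epochs=500, learning_rate=0.1):
    """Fit only the new output layer; the pre-trained cues stay untouched."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = pretrained_features(text)  # frozen layers: read, never changed
            error = complaint_probability((weights, bias), text) - label
            weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias

# A handful of made-up, brand-specific labeled posts (1 = complaint).
examples = [
    ("love the new app", 0),
    ("great support team", 0),
    ("app is broken again", 1),
    ("hate how slow checkout is", 1),
]
head = train_head(examples)
print(complaint_probability(head, "checkout is slow and broken") > 0.5)  # True
print(complaint_probability(head, "love it") > 0.5)  # False
```

Because only two output weights and a bias are learned, a few labeled posts are enough for this toy task; the expensive part, the pre-trained layers, is reused as-is.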

And don’t forget the machine learning part of the deal. Yes, the training of neural networks is often supervised or semi-supervised: in other words, human-labeled examples guide at least part of the training process. But for the most part, the networks improve their performance automatically, responding to a constant feedback loop, and what they’re getting better at is the spontaneous recognition of complex patterns, not just obeying simple heuristic rules.

It’s a big deal. If I haven’t convinced you, the series of videos beginning here is a relatively painless, if somewhat deeper, introduction: